Implementing eOSCE During COVID-19 Lockdown

Elham Alshammari

Department of Pharmacy Practice, College of Pharmacy, Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

ABSTRACT

Assessment is an essential component of the curriculum. When used appropriately, it can help accomplish curricular goals. In medical education, assessment of clinical competence has undergone many changes in recent times, leading to the development of newer assessment modalities. One such method is the Objective Structured Clinical Examination (OSCE), which offers better quality than the conventional clinical examination. Traditional OSCEs, however, have some shortcomings. When implemented in institutions with many students and inadequate time for observing and marking learners' performance, this tool gives examiners little opportunity to provide timely and individualized feedback. At the same time, universities are experiencing uncertain times, with the ongoing COVID-19 pandemic affecting assessment modalities. It is, therefore, believed that improved efficiency in evaluation and in the provision of customized student feedback can be achieved through computerized or electronic marking systems, one example being the eOSCE. In this regard, the objective of the study was to examine the implementation of eOSCE during the COVID-19 lockdown in Saudi universities. The study used a sample of pharmacy faculty and examined their experience of, and satisfaction with, the implementation of eOSCE as a potential replacement for OSCE during university lockdown. All the study participants (100%) agreed that eOSCE saved time; 62.5% noted that eOSCE was easy to use and grade; and 12.5% preferred not to give their opinion and chose to remain “neutral”. In sum, the study shows that eOSCE is effective in facilitating the assessment process and can be used effectively during certain and uncertain times.

Keywords: Objective Structured Clinical Exams, OSCE, Pharmacy, Remote exam, University lockdown

Introduction

Practical professional competency testing is an essential part of the curriculum.[1] When used properly, this component can help realize the primary goals of the curriculum. Without testing, learning is less likely to be comprehensive and students are likely to be less committed to the learning process.[2] In the field of medical education, assessment practices have undergone numerous changes over the years.[3, 4] Some of the newer modalities of assessment proposed in recent years are considered to be of higher quality than earlier ones. Generally, good assessment practice should demonstrate objectivity, reliability, structure, validity, and defensibility.[2] An example of a testing approach that meets these criteria is the Objective Structured Clinical Examination (OSCE), which has gained credibility because it offers better quality of assessment than the conventional clinical examination.[2]

What is OSCE?

Since the original OSCE was developed, various definitions have emerged. Harden and his colleagues first introduced OSCE in the mid-1970s and defined this technique as “An approach to the assessment of clinical competence in which the components are assessed in a planned or structured way with attention being paid to the objectivity of the examination.”[5] OSCE has also been described as an approach to clinical examination often used in the clinical sciences, such as nursing, medicine, dentistry, pharmacy, and radiography, among other disciplines.[2] A consolidated definition in a recent study viewed OSCE as “An assessment tool based on the principles of objectivity and standardization, in which the candidates move through a series of time-limited stations in a circuit for assessment of professional performance in a simulated environment. At each station candidates are assessed and marked against standardized scoring rubrics by trained assessors.”[5] A few variants of OSCE exist, such as the Objective Structured Practical Examination (OSPE), Objective Structured Assessment of Technical Skills (OSATS), Objective Structured Video Examinations (OSVE), and Team Objective Structured Clinical Examination (TOSCE).[5] These variants use OSCE’s original format of moving around assessment stations to examine various learning outcomes.

The approach has often been used to examine clinical skills and competence in such aspects as clinical examination, history taking, medical procedures, communication, interpretation of medical tests, prescription, and clinical psychomotor skills, among others.[2, 5] The structure of OSCEs includes Standardized Patients (SP), meant to enhance the psychometric properties of the assessment approach.[2] Besides, the convenience of the Clinical Skills Laboratory makes it easier to use OSCE as a training and assessment tool in the field of medical education.[2]

In terms of functionality, the OSCE works as follows. The clinical assessment is timed, and students are required to move from one station to another. At each station, students perform certain clinical tasks in a simulated setting that entails interaction with real or standardized patients. A single OSCE examination may include about 10 to 25 stations, each requiring between 5 and 20 minutes depending on the aspects being tested, as well as the level and depth of the assessment. Since the assessment involves a group of stations, it can also be viewed as a circuit. The stations may require students to participate in such activities as history taking, counseling, physical examination, post-encounter examination questions, case summaries, laboratory result interpretations, procedural skills, instrument identification, and viva voce, among other tasks. One or two examiners supervise each station, and their role is to examine how the students perform the necessary tasks. The examiners also award marks to the students based on preset and verified criteria. During an OSCE examination, the students rotate through the stations and complete all the tasks in the circuit. In this manner, all the students engage with the same stations and are examined on the same tasks.[2] OSCE can evaluate skills in all three domains of learning: affective, cognitive, and psychomotor. However, it should be noted that there are more suitable methods for examining some of these skills. Besides, it is not viable to examine all the levels of difficulty in each of the three domains using an OSCE, one example being a situation where students are required to evaluate knowledge in the cognitive domain.[5]
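The rotation described above can be illustrated with a minimal sketch. It assumes one student per station, no rest stations, and no changeover time between stations; these simplifications, along with the function name `rotation_schedule`, are illustrative assumptions and are not taken from the cited sources.

```python
def rotation_schedule(num_stations, minutes_per_station):
    """Build a simple OSCE circuit: one student per station, everyone rotates each round.

    Returns (schedule, total_minutes), where schedule[r][s] is the index of the
    student sitting at station s during round r.
    Assumption: as many students as stations, no rest stations, no changeover time.
    """
    schedule = []
    for round_index in range(num_stations):
        # The student who started at station k is at station (k + round_index) % n,
        # so station s currently hosts student (s - round_index) % n.
        round_assignment = {
            station: (station - round_index) % num_stations
            for station in range(num_stations)
        }
        schedule.append(round_assignment)
    total_minutes = num_stations * minutes_per_station
    return schedule, total_minutes


# Smallest circuit mentioned in the text: 10 stations of 5 minutes each.
schedule, total_minutes = rotation_schedule(num_stations=10, minutes_per_station=5)
print(total_minutes)  # duration of one full circuit, in minutes
```

Under these assumptions every student meets every station exactly once, which is the property that lets all students be examined on the same tasks.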

There are certain educational principles associated with OSCE. Two major ones are ‘objectivity’ and ‘structure.’ The principle of objectivity relies on the use of standardized scoring rubrics, where examiners ask the same questions of each student. OSCE often uses a well-structured and standardized station intended to evaluate a certain clinical task that is mapped against the curriculum.[5] For an OSCE to be considered to have a high level of validity, it must measure what it is designed to measure. The content of the test must represent what the curriculum needs to examine, and the tasks must be accurate and must assess the right domains. An OSCE must also record, handle, store, and analyze the responses to the test items accurately.[5] Moreover, the test results from OSCE must correlate with the outcomes of other tests examining similar domains and show poor correlation with those examining a different domain.[5]

Apart from that, the OSCE must also have a high degree of reliability, which means that the outcome of the examination must be reproducible with minimal error.[2, 5, 6] The key influences on the reliability of OSCE include the number of stations, standardized scoring rubrics, the use of trained examiners, and standardized patient performance. OSCE tests that use a large sample of clinical cases tend to maximize reliability.[2, 5, 6] Standardized scoring rubrics mark students against the same criteria, thereby improving the uniformity of scoring. Moreover, training examiners reduces variation in scoring and improves the consistency of their behavior.

The OSCE has been used in various ways in undergraduate and graduate examinations globally. It has also been used for licensure tests as well as a tool for feedback in foundational settings.[2, 5, 6] Some of the popular applications of OSCE are as follows. First, it is used as a performance-based assessment tool for examining the minimum standards of students during undergraduate years in a majority of the medical schools in the US, UK, and Canada.[5] Next, OSCE is used as a high-stakes graduate evaluation tool in Royal College examinations. It is also used as a formative evaluation tool in undergraduate medical education, as well as a tool for providing instant feedback. Also, some institutions use OSCE to assess graduates seeking high-stakes licensure and certification to practice medicine.[5]

Traditional OSCEs, however, have some shortcomings. When implemented in institutions with many students and inadequate time for observing and marking learners' performance, this tool gives examiners little opportunity to provide timely and individualized feedback.[6] This may explain why most students receive delayed feedback from OSCEs. The late feedback frustrates most learners and often has a negative impact on the learning process. Moreover, traditional OSCEs leave room for inconsistency of assessment among examiners.[6] Additional errors may also occur when data are transferred from paper to digital media for the purpose of compiling student marks. Notably, educators need many resources to use traditional OSCE methods, most of which become difficult to manage with larger groups of learners. These challenges are compounded by the worldwide scarcity of health professionals and the resulting need to educate more students. As such, there is a need to establish more efficient and valuable approaches for examining the practical clinical skills of learners.[6]

Improved efficiency in the evaluation and provision of customized student feedback can be achieved through computerized or electronic marking systems.[6] One such system is the “eOSCE”, an electronic medium designed to help in the assessment of practical skills. With eOSCE, examiners can use an iPad to document their comments directly, thereby reducing possible errors and the post-examination workload associated with transferring marks into electronic files. An electronic system can also provide students with direct and immediate feedback from the examiner.[6, 7]

During the COVID-19 lockdown, all Saudi universities shifted to running lectures and tests remotely. Based on this switch, the eOSCE has been considered a potential replacement for the traditional OSCE. The proposed electronic approach would incorporate a Blackboard audio response feature with a built-in rubric for grading. The prevailing assumption is that such an approach will help minimize workloads after an examination. With these benefits in mind, this paper sought to examine the implementation of eOSCE during the COVID-19 lockdown in Saudi universities.

Previous Studies on OSCE

There exists a considerable body of literature on OSCE and its ability to facilitate standardized assessment. One study tested an OSCE consisting of 22 stations using a sample of 67 students in their final year.[8] The authors designed the assessment to incorporate all the domains of learning, including cognitive, psychomotor, and affective. The outcome of the study showed that OSCE was an appropriate approach that exhibited high construct validity. Statistical findings showed a significant correlation between the station score and the overall examination score for 19 stations. The reliability of the OSCE test was found to be 0.778. Besides, students and examiners were highly satisfied with the format of assessment. These findings imply that OSCE is a suitable tool for achieving standardized assessments. Besides, the findings demonstrate that the validity and reliability of OSCE can be enhanced by incorporating various modalities.[8]

Another study also evaluated the suitability of OSCE among undergraduate students pursuing accident and emergency medicine.[9] The authors introduced an OSCE for fourth-year medical students towards the end of their accident and emergency medical attachment. The structure of the OSCE included 10 examination stations, whereby participants were required to rotate around each in 3-min intervals. The outcome showed that medical students and their examiners found OSCE satisfactory in the assessment process. The marks attained on the OSCE exam had a significant correlation with the independent rating of the ability of the students. The format of the OSCE was considered appropriate to accident and emergency medicine since it would have been impractical for the participants to take part in conventional clinical exams.[9]

Equally, a study in this domain evaluated the feasibility of OSCE in the assessment of competency among undergraduate medical students.[10] Two independent coders applied the Best Evidence Medical Education methodology to 1083 studies selected through a literature search spanning 1975 to 2008. The study findings revealed that OSCE was a suitable tool for examining clinical competence in differing contexts. The study findings also revealed that OSCE could be used to examine different learning outcomes; facilitate both summative and formative purposes in different disciplines and specialties; and examine curriculum or educational interventions. The findings also revealed that this form of assessment could be used in different professions in the health care field. These findings imply that the use of OSCE guarantees reliable results, and is flexible in the number of students assessed, the number of examiners included, types of patients represented, and the format of examination.[10]

Some authors have also examined the perception of learners concerning the benefits of OSCE.[11] The authors delivered an electronic questionnaire to 34 German medical schools and invited learners in years 3-6 to rate the goals of effective medical assessment. OSCE was rated on a 5-point Likert scale, and factor analysis was used to detect the basic components in the ratings. Factor analysis revealed that the key elements of effective medical assessment for OSCE were educational impact and clinical competence. Overall, the outcome of the study also revealed that students considered OSCE to be a valuable assessment for the purpose it was designed to serve.[11]

Focus on the OSCE is also evident in another study evaluating the attitudes and perceptions of first- and second-year medical students.[12] A total of 1280 students participated in the OSCE and completed an additional questionnaire examining their views concerning the OSCE. The study findings revealed that most students supported the addition of an OSCE to the list of current assessments. The study also revealed additional benefits of the OSCE as an experience and process, since it fostered feelings of responsibility as well as identity. Overall, the outcome of the study supports the introduction of OSCE early in the medical course due to the numerous benefits reported.[12]

Previous studies on OSCE demonstrate that electronic systems are effective and are favored by educators over traditional approaches. For instance, one study used a sample of dermatology undergraduate students to evaluate computer-assisted OSCE (CA-OSCE).[13] The authors developed and introduced CA-OSCE as a method for end-of-posting assessment. The authors compiled the average attendance and assessment scores for the learners undergoing CA-OSCE. The findings revealed the average attendance scores as 83.36% and the median assessment scores as 77.47%, compared to 64.09% and 52.07% for the previous year's learners. The authors found the difference between the two groups to be statistically significant. Students also demonstrated high acceptability of CA-OSCE, and their feedback was encouraging. These study findings imply that CA-OSCE is an important tool for evaluating dermatology undergraduates and can encourage them to attend regularly.[13]

Another study also used dermatology undergraduate trainee students to compare CA-OSCE and OSCE as tools of assessment.[14] The two tools were used as an end-of-posting assessment. The attendance and marks in both assessments were recorded carefully and analyzed with the help of SPSS. The authors also recorded feedback from the learners and the examiners. The study findings showed high reliability for both tests.[14] However, CA-OSCE was found to be more valid, reliable, and effective for dermatology assessment. Most examiners preferred using CA-OSCE rather than the traditional OSCE tool. However, the findings of the study were limited by the small sample size used by the authors.[14]

An additional study tried to validate the use of eOSCE in a study sample of undergraduate dermatology students. A sample of fifth-year medical students was used in the study.[15] The scores of the learners in the electronic OSCE showed a positive correlation with those on the clinical presentation. The eOSCE also had an outstanding correlation with the general scores of the learners, demonstrating that the electronic approach is reliable in assessing dermatology.[15] Notably, the students returned positive feedback on the two assessment methods.

Materials and Methods

The objective of the current study was to examine the experience and satisfaction of pharmacy faculty with the implementation of eOSCE as a potential replacement for OSCE during university lockdown. The study featured an examiner questionnaire with questions evaluating their eOSCE experience as well as their satisfaction with the Blackboard method. The responses obtained from the questionnaire were evaluated using a five-level Likert scale, ranging from 1, denoting “strongly disagree,” to 5, denoting “strongly agree.” Following this, the examiners were also asked a series of questions that entailed commenting on the merits and demerits of eOSCE. The participants were also asked to provide suggestions for potential examiners. A description of the participants and the process used to sample and select them is detailed as follows.

Selection and description of participants

Considering the objective of the study, the author followed a prospective study design. Nine instructors were selected to conduct eOSCE for the first time in their job. The study was set remotely at a pharmacy college – Princess Nourah bint Abdulrahman University (PNU). All the study participants had served as examiners in at least three traditional OSCE tests.

Results and Discussion

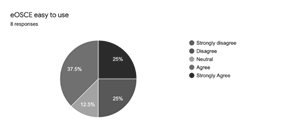

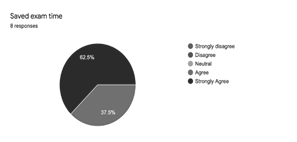

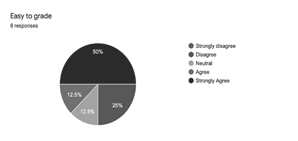

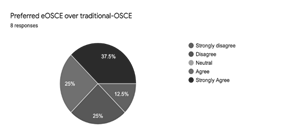

Eight of nine examiners voluntarily participated in an online survey. A summary of the study findings is as follows. All the study participants (100%) agreed that eOSCE saved time; 62.5% noted that eOSCE was easy to use and grade; and 12.5% preferred not to give their opinion and chose to remain “neutral”. Participants who considered the electronic form of assessment easy to use and grade also preferred eOSCE over the traditional OSCE, while 37.5% of the participants did not like the electronic form of assessment. The key advantages of eOSCE as an alternative to the traditional OSCE, as noted in the study, were as follows: it is faster, responses are easy to track and re-hear, the timing of grading is flexible, it is paperless through the use of a built-in rubric, and all students can take the exam at the same time. The study also identified disadvantages, such as the risk of technical issues, which may be handled by preparing multiple models for retaking the exam. Another demerit is the double time needed to plan an online event. Lastly, some examiners agreed that eOSCE can be made cheating-free by integrating audio and video recording. Apart from these concerns, the findings revealed that eOSCE was well suited to difficult situations, such as a lockdown. However, the approach can also be applied after the full re-opening of learning institutions. A summary of these findings is shown in Figures 1, 2, 3, and 4 below.

Figure 1. Responses on the Ease of Use of eOSCE

Figure 2. Responses on Saved Exam Time Resulting From eOSCE Use

Figure 3. Responses on Easy to Grade as Pertains to eOSCE

Figure 4. Responses on Preference of eOSCE over traditional-OSCE
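As a reading aid, the reported percentages can be related back to the eight respondents with a short sketch. The per-item counts below are not reported in the study; they are inferred from the percentages and a sample of eight, and the labels and the helper `percentage` are hypothetical. The items may come from different questionnaire questions, so the counts are not expected to sum to eight.

```python
def percentage(count, total):
    """Share of respondents, expressed as a percentage of the total."""
    return 100.0 * count / total


RESPONDENTS = 8  # eight of the nine examiners completed the survey

# Assumed counts, inferred from the reported percentages (not stated in the study).
assumed_counts = {
    "agreed eOSCE saved time": 8,
    "found eOSCE easy to use and grade": 5,
    "remained neutral": 1,
    "did not like eOSCE": 3,
}

percentages = {
    label: percentage(count, RESPONDENTS)
    for label, count in assumed_counts.items()
}

for label, value in percentages.items():
    print(f"{label}: {value}%")
```

With a sample of eight, each respondent accounts for 12.5 percentage points, which is worth keeping in mind when interpreting differences between items.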

These findings are consistent with some recent studies [13-15] that have shown that eOSCE is effective in facilitating the assessment process. The current study agrees with these past scholarly works in that electronic OSCE offers numerous advantages in terms of organization, administration, and evaluation. With traditional OSCE, it is usually difficult to organize large numbers of students.[13-15] The format of eOSCE effectively manages this challenge, with most of the organization done digitally. Unlike OSCE, which requires a lot of time to administer,[13-15] electronic OSCE requires much less time since all the learners are given the same set of instructions and a standard time limit to respond to the questions. Besides, clarification sought by one student is also given to the rest of the group at the same time.[13, 14] To some extent, these benefits show that electronic OSCE can be a reliable option during university lockdown. Lastly, the format of eOSCE is objective and organized in a structured manner. Marks allotted for each activity are indicated in electronic format, making the process of evaluation much easier. For this reason, it is also believed that the implementation of eOSCE during university lockdown would promote justice and fairness towards the students, since the electronic medium reduces subjectivity.

Despite the positive contributions this paper makes to the research literature, it has some limitations. There is no clear indication from the study whether eOSCE can examine the cognitive, affective, and psychomotor domains. Besides, the study does not consider examiners' attitudes, their methods of evaluation, or how they conduct themselves when using eOSCE. Despite these shortcomings, the use of electronic OSCE appears to be a suitable means of ensuring students receive quality instruction and training during certain and uncertain times.

Conclusion

The paper sought to examine the implementation of eOSCE during the COVID-19 lockdown in Saudi universities. The study featured an examiner questionnaire with questions evaluating their eOSCE experience as well as their satisfaction with the electronic medium delivered through the Blackboard method. Although the sample is limited and experiences were obtained from only eight examiners, the findings and suggestions obtained from this study may help decision-makers at universities in Saudi Arabia and other parts of the world to incorporate this type of testing in course assessments.

Conflicts of Interest

The author has declared that no competing interests exist.

Ethics Approval

Institutional review board IRB log number with KACST, KSA: 20-0188.

Acknowledgment

This research was funded by the Deanship of Scientific Research at Princess Nourah bint Abdulrahman University through the Fast-track Research Funding Program.

References

1. Gholami M. Evaluating the Curriculum for BS of Radiologic Technology in Iran: An International Comparative Study. Entomol. appl. sci. lett. 2018; 5(3): 48-59.

2. Onwudiegwu U. OSCE: Design, development, and deployment. Journal of the West African College of Surgeons. 2018; 8(1): 1-2.

3. Taheri A, Torabi M, Honarmand FM, Taheri S. Assessment of Medical University Students’ Knowledge Toward Dental Caries Prevention. Ann. Dent. Spec. 2018; 6(2): 164-8.

4. Alzahrani S, Alosaimi M, Malibarey WM, Alhumaidi AA, Alhawaj AH, Alsulami NJ, Alsharari AS, Alyami AA, Alkhateeb ZA, Alqarni SM, Khalil DA. Saudi Family Physicians' Knowledge of Secondary Prevention of Heart Disease: A National Assessment Survey. Arch. Pharm. Pract. 2019; 10(4): 54-60.

5. Khan KZ, Ramachandran S, Gaunt K, Pushkar P. The Objective Structured Clinical Examination (OSCE): AMEE guide no. 81. Part 1: An historical and theoretical perspective. Medical Teacher. 2013; 35(9): e1437-e1446.

6. Snodgrass SJ, Ashby SE, Rivett AD, Russell T. Implementation of an electronic Objective Structured Clinical Exam for assessing practical skills in pre-professional physiotherapy and occupational therapy programs: Examiner and course coordinator perspectives. Australasian Journal of Educational Technology. 2014; 30(2): 152-16

7. Majumder MAZ, Kumar A, Krishnamurthy K, Ojeh N, Adams OP, Sa B. An evaluation study of objective structured clinical examination (OSCE): students and examiners perspectives. Advances in Medical Education and Practice. 2019; 10: 387-39

8. Bhatnagar KR, Saoji VA, Banerjee AA. Objective structured clinical examination for undergraduates: Is it a feasible approach to standardized assessment in India? Indian Journal of Ophthalmology. 2011; 59(3): 211-214.

9. Johnson G, Reynard K. Assessment of an objective structured clinical examination (OSCE) for undergraduate students in accident and emergency medicine. Journal of Accident and Emergency Medicine. 1994; 11: 223-226.

10. Patricio MF, Juliao M, Fareleira F, Carneiro AV. Is the OSCE a feasible tool to assess competencies in undergraduate medical education? Medical Teacher. 2013; 35(6): 503-514.

11. Muller S, Settmacher U, Koch I, Dahmen U. A pilot survey of student perceptions on the benefit of the OSCE and MCQ modalities. GMS Journal for Medical Education. 2018; 35(4): 1-13.

12. Furmedge DS, Smith L, Sturrock A. Developing doctors: What are the attitudes and perceptions of year 1 and 2 medical students towards a new integrated formative objective structured clinical examination? BMC Medical Education. 2016; 16(32): 1-9.

13. Grover C, Bhattacharya SN, Pandhi D, Singal A, Kumar P. Computer-assisted Objective Structured Clinical Examination: A useful tool for dermatology undergraduate assessment. Indian Journal of Dermatology, Venereology, and Leprology. 2012; 78(4): 519.

14. Chaudhary R, Grover C, Bhattacharya SN, Sharma A. Computer-assisted Objective Structured Clinical Examination versus Objective Structured Clinical Examination in assessment of dermatology undergraduate students. Indian Journal of Dermatology, Venereology, and Leprology. 2017; 83(4): 448-452.

15. Kaliyadan F, Khan AS, Kuruvilla J, Feroze K. Validation of a computer-based objective structured clinical examination in the assessment of undergraduate dermatology courses. Indian Journal of Dermatology, Venereology, and Leprology. 2014; 80(2): 134-136.
